Ubuntu 14.04 64-bit + Caffe rc3 + CUDA 7.5 + Intel MKL Setup Guide

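I have tested these steps myself. They combine the best advice from many other guides and get Caffe running its computations on an NVIDIA GPU.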

Install the development dependencies

Install some basic packages needed for development:

sudo apt-get install build-essential  # basic requirement
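The command above installs only the compiler toolchain. Below is a hedged sketch for checking which of Caffe's other build dependencies are still missing. The package list is an assumption taken from the official Caffe "Ubuntu Installation" page for 14.04 (it is not part of this post), and `CAFFE_DEPS`, `missing_pkgs` and the `DPKG_QUERY` override are hypothetical names introduced for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which Caffe build dependencies are not installed.
# Package names are assumed from the official Caffe Ubuntu 14.04 instructions.
CAFFE_DEPS=(
  build-essential
  libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev
  libhdf5-serial-dev protobuf-compiler libboost-all-dev
  libgflags-dev libgoogle-glog-dev liblmdb-dev
)

# Print each package that dpkg does not report as "install ok installed".
# DPKG_QUERY can name a replacement command (handy for dry runs and tests).
missing_pkgs() {
  local pkg
  for pkg in "$@"; do
    if ! "${DPKG_QUERY:-dpkg-query}" -W --showformat='${Status}' "$pkg" 2>/dev/null \
        | grep -q 'install ok installed'; then
      echo "$pkg"
    fi
  done
}
```

For example, `sudo apt-get install $(missing_pkgs "${CAFFE_DEPS[@]}")` installs only the packages that are not present yet.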

Install CUDA and the driver

Preparation

All resources used below can be downloaded from Baidu Cloud: http://pan.baidu.com/s/1dEXPg3J (password: v19o).

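Installing the driver while the lightdm display manager is stopped seems to make it possible to use the integrated Intel graphics for display and the NVIDIA card for computation. The steps are as follows:

First, in the BIOS settings, select the Intel graphics for display, or set it as the primary display device.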

Boot into Ubuntu, press Ctrl+Alt+F1 to switch to a tty, log in there, and run:

sudo service lightdm stop

This command stops lightdm. If you use gdm or another display manager, stop it before installing the NVIDIA driver.
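If you are not sure which display manager your system runs, the sketch below reads it from /etc/X11/default-display-manager, the file Debian and Ubuntu normally use to record the display manager's binary path. Treat that file location as an assumption to verify on your machine; `display_manager_service` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the service name of the configured display
# manager, assuming /etc/X11/default-display-manager holds its binary path
# (for example /usr/sbin/lightdm). An alternative file can be passed as $1.
display_manager_service() {
  local conf="${1:-/etc/X11/default-display-manager}"
  if [ ! -r "$conf" ]; then
    echo "cannot read $conf" >&2
    return 1
  fi
  # /usr/sbin/lightdm -> lightdm
  basename "$(head -n 1 "$conf")"
}
```

Running `sudo service "$(display_manager_service)" stop` then stops whichever manager is configured, lightdm or gdm alike.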

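Download the deb package and install CUDA

Installing CUDA and the driver from the deb package saves a lot of trouble (see the CUDA Getting Started Guide). Download the CUDA deb package for your system, then add its package repository with:

sudo dpkg -i cuda-repo-<distro>_<version>_<architecture>.deb

Then refresh the package index and install CUDA:

sudo apt-get update
sudo apt-get install cuda

Reboot once the installation finishes:

sudo reboot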
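The repository package name follows the pattern in the dpkg command above. As a sketch, the helpers below fill in that pattern and run the same dpkg, apt-get update and apt-get install sequence. The example values `ubuntu1404` and `7.5-18` are assumptions for the CUDA 7.5 network repository on Ubuntu 14.04, so check them against the file you actually downloaded; both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the file name
# cuda-repo-<distro>_<version>_<architecture>.deb (architecture defaults to amd64).
cuda_repo_deb() {
  local distro="$1" version="$2" arch="${3:-amd64}"
  if [ -z "$distro" ] || [ -z "$version" ]; then
    echo "usage: cuda_repo_deb <distro> <version> [architecture]" >&2
    return 1
  fi
  printf 'cuda-repo-%s_%s_%s.deb\n' "$distro" "$version" "$arch"
}

# Hypothetical wrapper for the install steps above; uses sudo and must be run
# from the directory that holds the downloaded .deb file.
install_cuda_from_repo() {
  local deb
  deb="$(cuda_repo_deb "$@")" || return 1
  sudo dpkg -i "$deb" && sudo apt-get update && sudo apt-get install cuda
}
```

`cuda_repo_deb ubuntu1404 7.5-18` prints `cuda-repo-ubuntu1404_7.5-18_amd64.deb`; `install_cuda_from_repo ubuntu1404 7.5-18` performs the installation itself.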

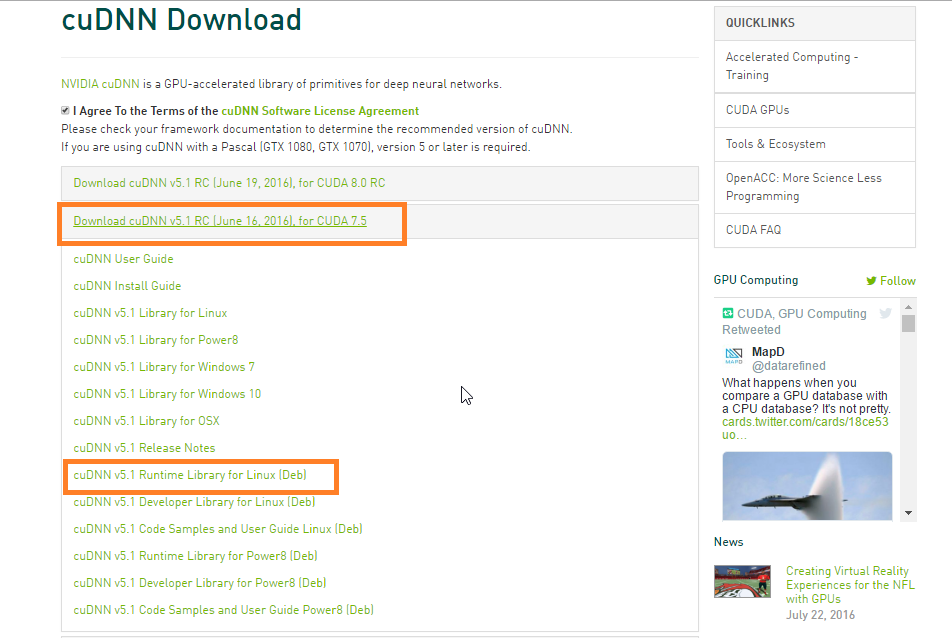

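Install cuDNN

(03-25: I downloaded the latest Caffe today and it would not compile. It kept throwing cuDNN errors, and I wasted several hours without fixing it! Only later did I discover that a Caffe update from 15 hours earlier had switched to cuDNN v2, with no obvious notice on the official site!!! What a trap!)

cuDNN accelerates the convolution and pooling computations in Caffe. Download cuDNN first (registration is required), or get it from Baidu Cloud: http://pan.baidu.com/s/1dEXPg3J (password: v19o).

Once the download finishes, run the following commands to extract and install it:

# Note: cd into the folder where you saved cudnn-7.5-linux-x64-v5.1-rc.tgz before extracting it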

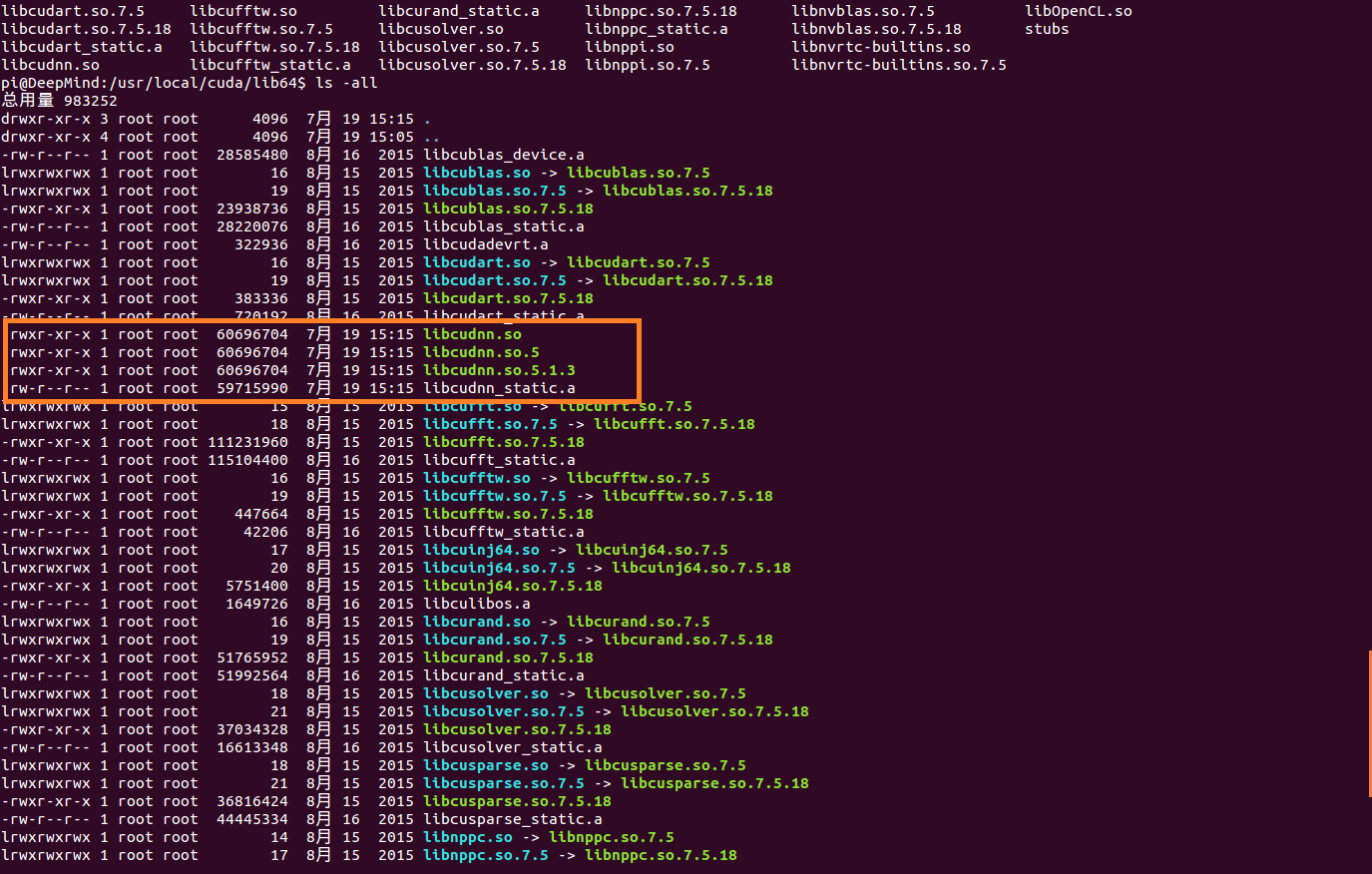

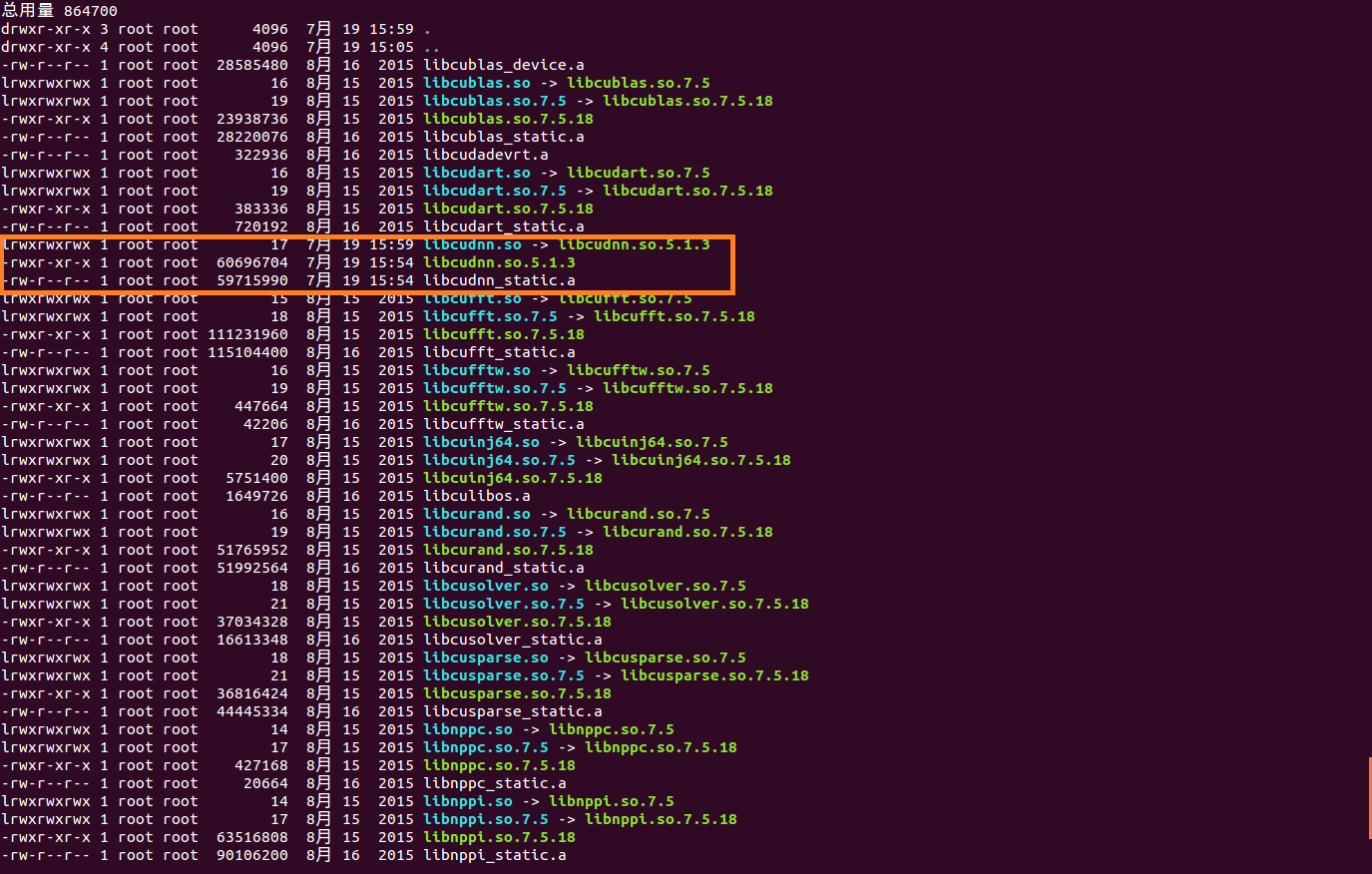
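For reference, one way to extract the archive and copy its files into the CUDA tree is sketched below. It assumes, as is usual for these cuDNN tarballs, that the archive unpacks into a top-level cuda/ folder containing include/ and lib64/. `install_cudnn` is a hypothetical helper; run it as root when the target is /usr/local/cuda.

```bash
#!/usr/bin/env bash
# Hypothetical helper: unpack a cuDNN archive (assumed layout cuda/include,
# cuda/lib64) and copy the header and libraries under a CUDA prefix.
install_cudnn() {
  local tgz="$1" prefix="${2:-/usr/local/cuda}" tmp status=0
  if [ ! -f "$tgz" ]; then
    echo "archive not found: $tgz" >&2
    return 1
  fi
  tmp="$(mktemp -d)" || return 1
  tar -xzf "$tgz" -C "$tmp" &&
    mkdir -p "$prefix/include" "$prefix/lib64" &&
    cp "$tmp"/cuda/include/cudnn*.h "$prefix/include/" &&
    # -P copies the libcudnn.so* symlinks as symlinks instead of duplicating files
    cp -P "$tmp"/cuda/lib64/libcudnn* "$prefix/lib64/" &&
    chmod a+r "$prefix"/include/cudnn*.h "$prefix"/lib64/libcudnn* ||
    status=1
  rm -rf "$tmp"   # always remove the scratch directory
  return "$status"
}
```

From a root shell in the download folder: `install_cudnn cudnn-7.5-linux-x64-v5.1-rc.tgz`.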

Update the symbolic links

cd /usr/local/cuda/lib64/

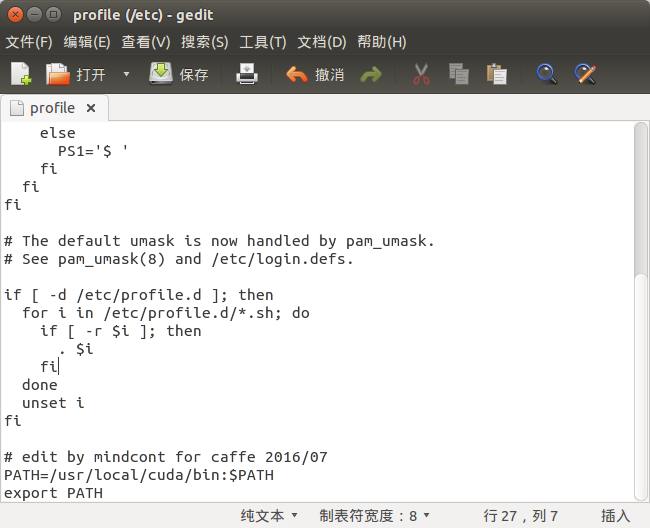
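Updating the links here typically means recreating the libcudnn.so -> libcudnn.so.MAJOR -> libcudnn.so.MAJOR.MINOR.PATCH chain so that it points at the newly copied library. A hedged sketch of that step follows. It does not assume a particular version number, but it expects exactly one fully versioned libcudnn.so.X.Y.Z file in the directory; `relink_cudnn` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rebuild libcudnn.so -> libcudnn.so.MAJOR -> the single
# fully versioned libcudnn.so.X.Y.Z found in the given directory.
relink_cudnn() {
  local dir="${1:-/usr/local/cuda/lib64}" full major
  full="$(cd "$dir" && ls libcudnn.so.*.*.* 2>/dev/null | head -n 1)"
  if [ -z "$full" ]; then
    echo "no libcudnn.so.X.Y.Z in $dir" >&2
    return 1
  fi
  major="${full#libcudnn.so.}"   # e.g. 5.1.3
  major="${major%%.*}"           # e.g. 5
  (
    cd "$dir" &&
      rm -f libcudnn.so "libcudnn.so.$major" &&
      ln -s "$full" "libcudnn.so.$major" &&
      ln -s "libcudnn.so.$major" libcudnn.so
  )
}
```

Run it as root, e.g. `relink_cudnn /usr/local/cuda/lib64`, and then `sudo ldconfig`.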

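Set environment variables

After installation, add the environment variable to /etc/profile:

sudo gedit /etc/profile

Append the following at the end of the file:

PATH=/usr/local/cuda/bin:$PATH

Save the file, then run the following command so the change takes effect in the current shell right away:

source /etc/profile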
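Editing /etc/profile by hand works once, but re-running the setup can append the same PATH line repeatedly. A minimal sketch of an idempotent append is below; `append_once` is a hypothetical helper, and writing to /etc/profile requires root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE only if that exact line is not
# already present, so repeated runs do not duplicate entries.
append_once() {
  local line="$1" file="$2"
  touch "$file" || return 1
  # -x: match the whole line, -F: treat the text literally ($ is not special)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

From a root shell: `append_once 'PATH=/usr/local/cuda/bin:$PATH' /etc/profile`. The single quotes keep `$PATH` literal, so it is expanded when the profile is read rather than when the line is written.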

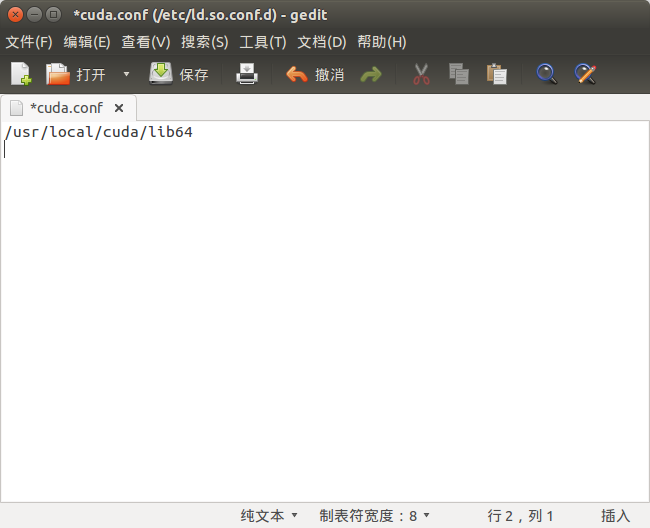

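You also need to add the library path: create a file named cuda.conf in /etc/ld.so.conf.d/ with the following content:

/usr/local/cuda/lib64

Save it, then run the following so the change takes effect immediately:

sudo ldconfig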
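The cuda.conf step (and the intel_mkl.conf step later on) can be scripted as sketched below. `write_ld_conf` and its `LD_CONF_DIR` and `LDCONFIG` overrides are hypothetical names added for illustration and testing; by default the helper writes into /etc/ld.so.conf.d and runs ldconfig, so it needs root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write one library directory per line to
# <conf dir>/<name>.conf (overwriting it), then refresh the linker cache.
write_ld_conf() {
  if [ "$#" -lt 2 ]; then
    echo "usage: write_ld_conf <name> <libdir>..." >&2
    return 1
  fi
  local name="$1" dir="${LD_CONF_DIR:-/etc/ld.so.conf.d}"
  shift
  printf '%s\n' "$@" > "$dir/$name.conf" && "${LDCONFIG:-ldconfig}"
}
```

From a root shell, `write_ld_conf cuda /usr/local/cuda/lib64` recreates the cuda.conf file described above and refreshes the cache, overwriting any existing file of that name.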

Test CUDA

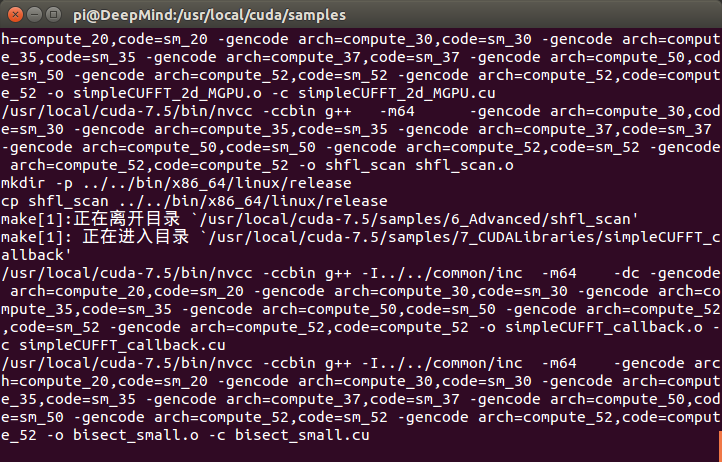

Go to /usr/local/cuda/samples and build the samples with:

sudo make all -j4

Build progress:

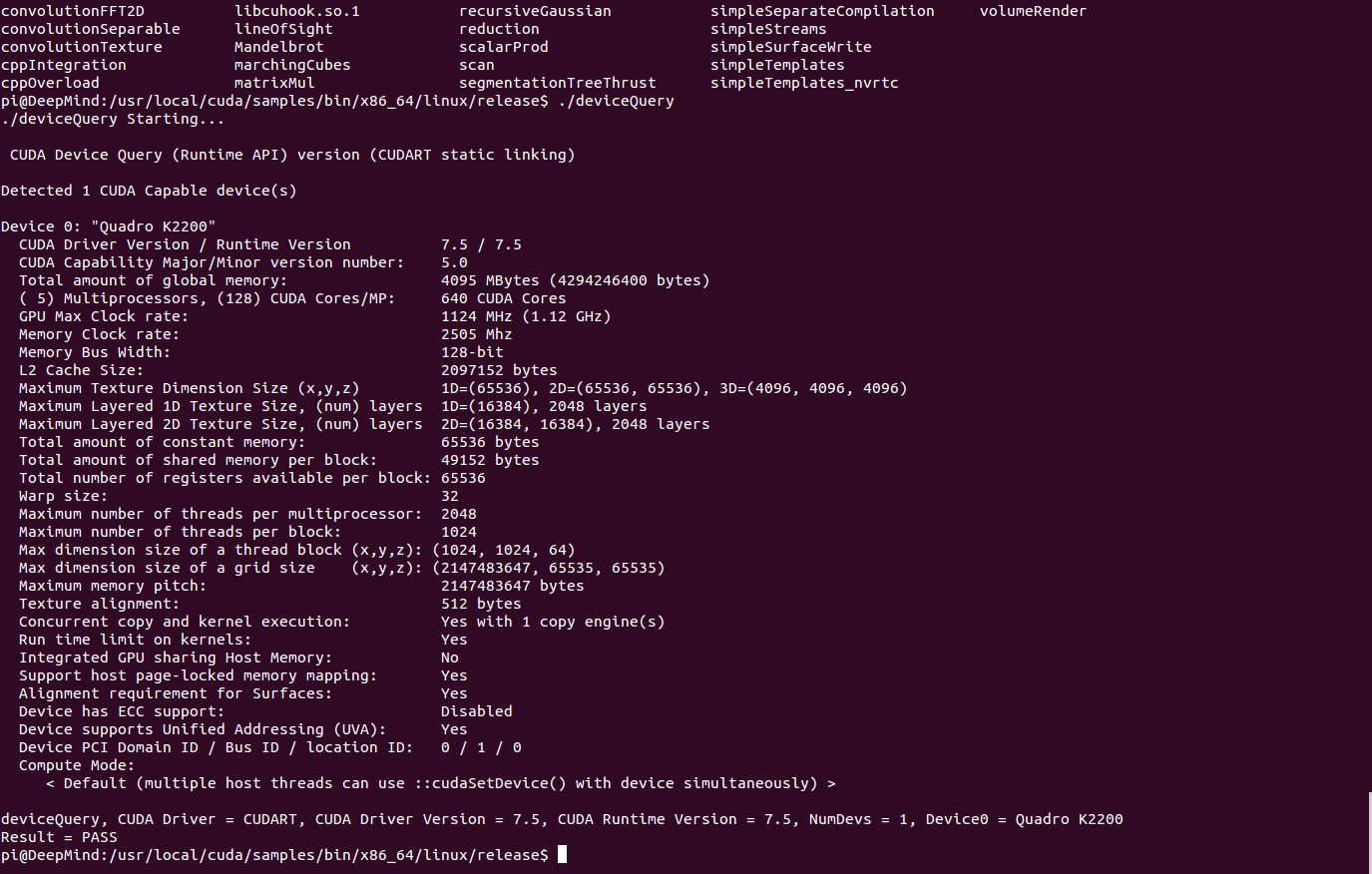

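The whole build takes about 10 minutes. When everything has compiled, go to samples/bin/x86_64/linux/release and run deviceQuery:

./deviceQuery

If it prints your graphics card's information, the driver and card are installed correctly:

pi@DeepMind:/usr/local/cuda/samples/bin/x86_64/linux/release$ ./deviceQuery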
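Instead of reading the full deviceQuery report by eye, a small filter can check the verdict. The sketch below assumes deviceQuery's usual output, which ends with a `Result = PASS` line and prints a `CUDA Capability Major/Minor version number:` line for each device; `check_device_query` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read deviceQuery output on stdin, fail unless it
# contains "Result = PASS", and print each device's compute capability.
check_device_query() {
  local out
  out="$(cat)"
  if ! grep -q 'Result = PASS' <<<"$out"; then
    echo "deviceQuery did not pass" >&2
    return 1
  fi
  # The capability (e.g. 5.0) is the last field of the matching line.
  grep 'CUDA Capability Major/Minor version number' <<<"$out" | awk '{print $NF}'
}
```

`./deviceQuery | check_device_query` prints the compute capability, e.g. 5.0 for the K2200 used in this post, which is the number needed later for CUDA_ARCH.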

Install Intel MKL or ATLAS

If you don't have Intel MKL, install the free ATLAS library with:

sudo apt-get install libatlas-base-dev

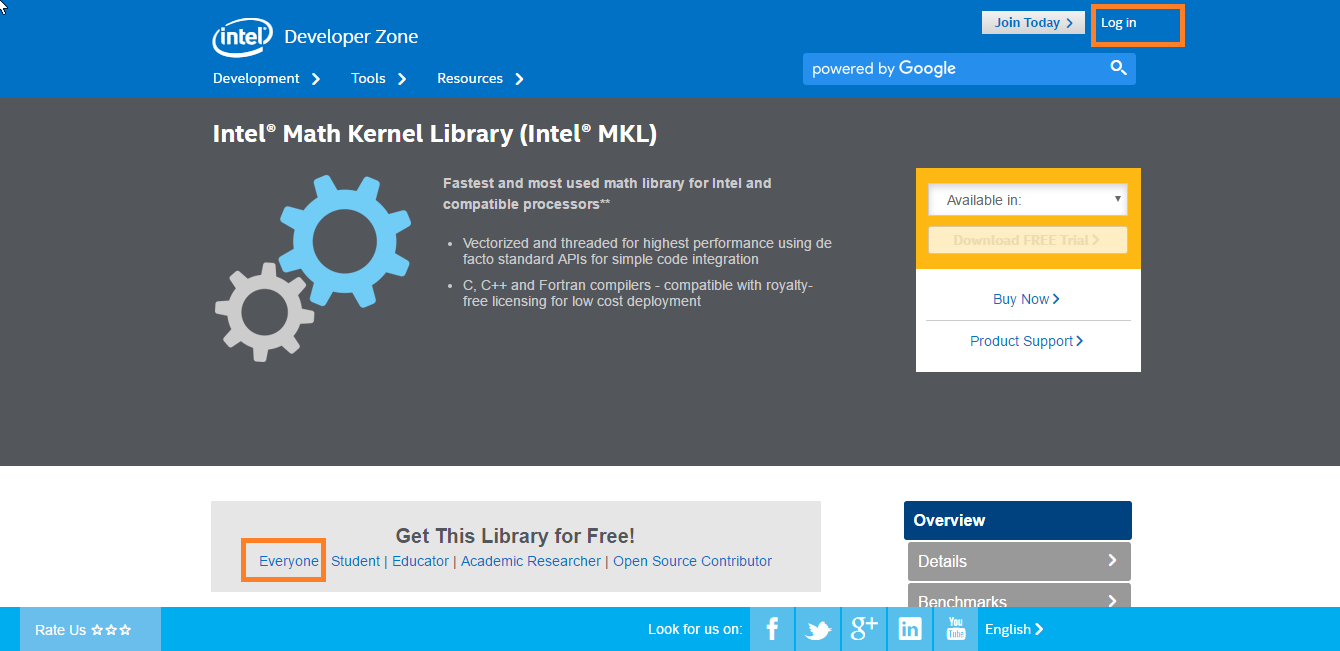

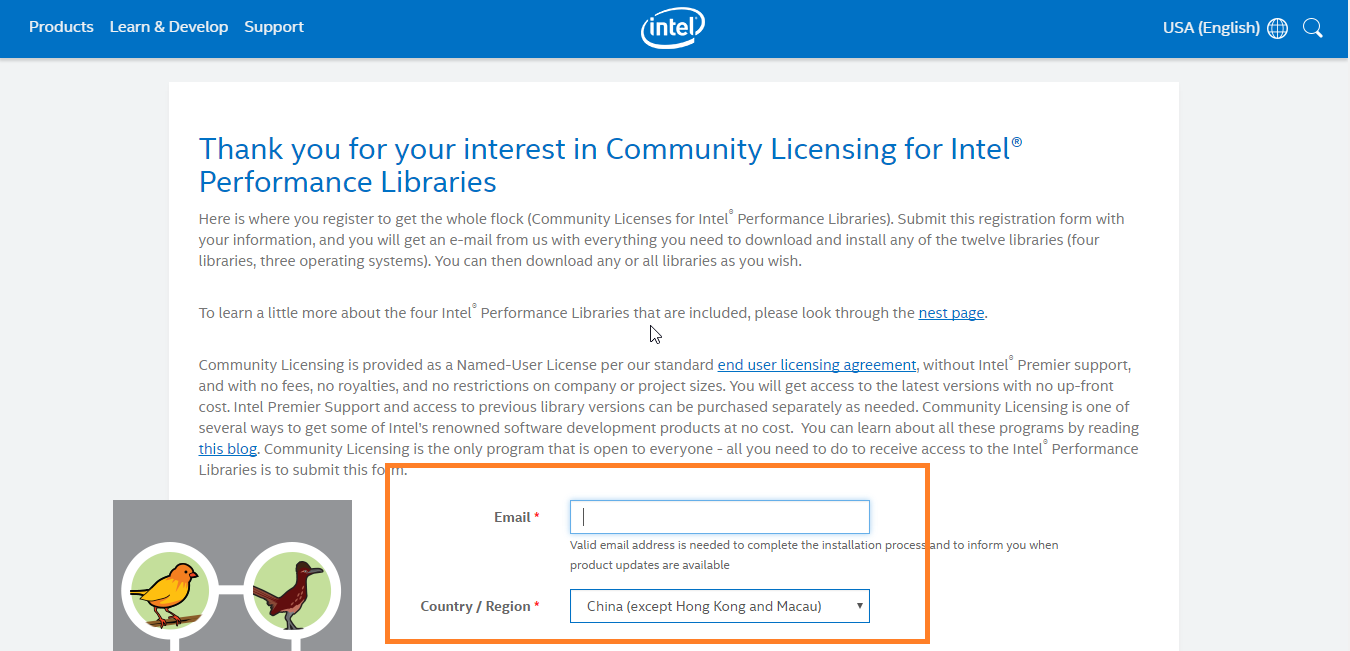

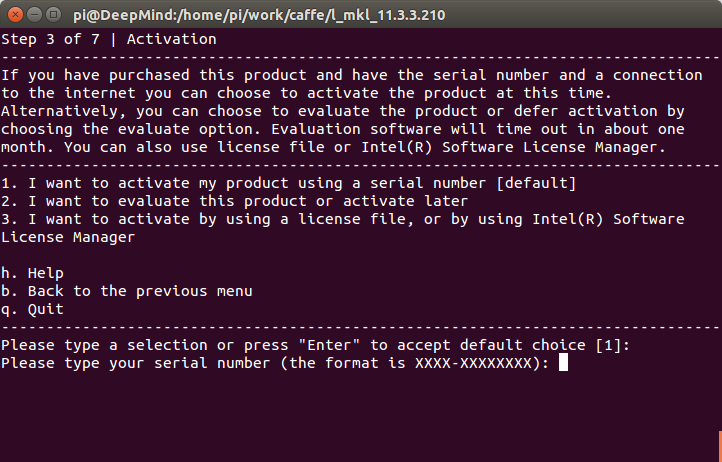

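If you want to use the Intel® MKL package instead, you need to register for it first.

Register, then log in to this page and select 'Get This Library for Free!' -> 'Everyone'.

Choose the Community Licensing option.

After you fill in the form, a download link and an activation code are sent to your registration email.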

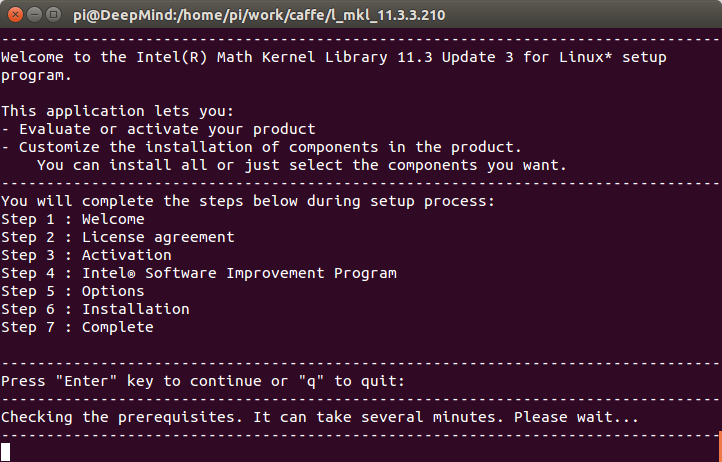

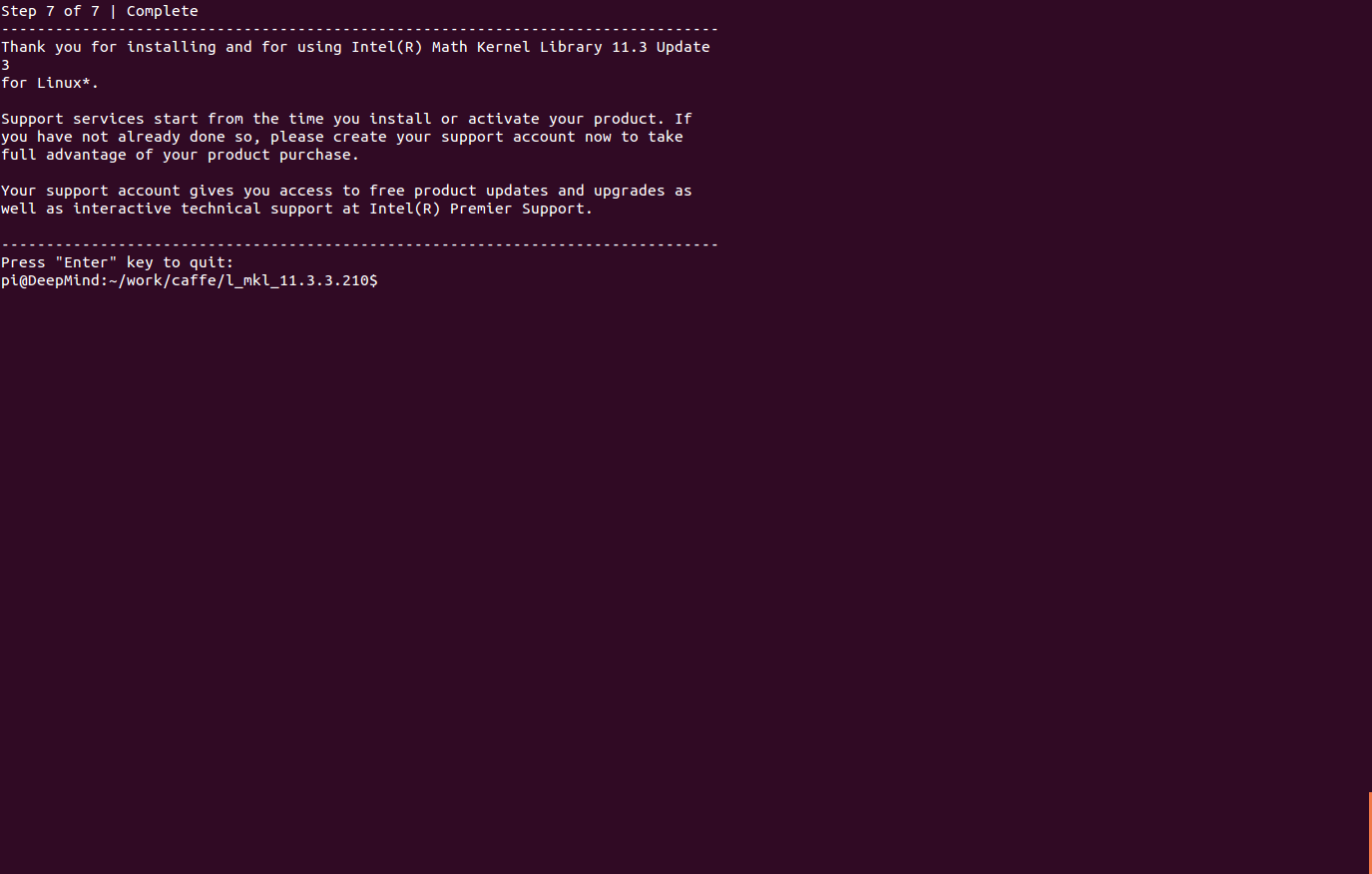

First extract the package. It contains an install_GUI.sh script; run it to launch the graphical installer, then follow the instructions step by step.

tar -zxvf l_mkl_11.3.3.210.tgz

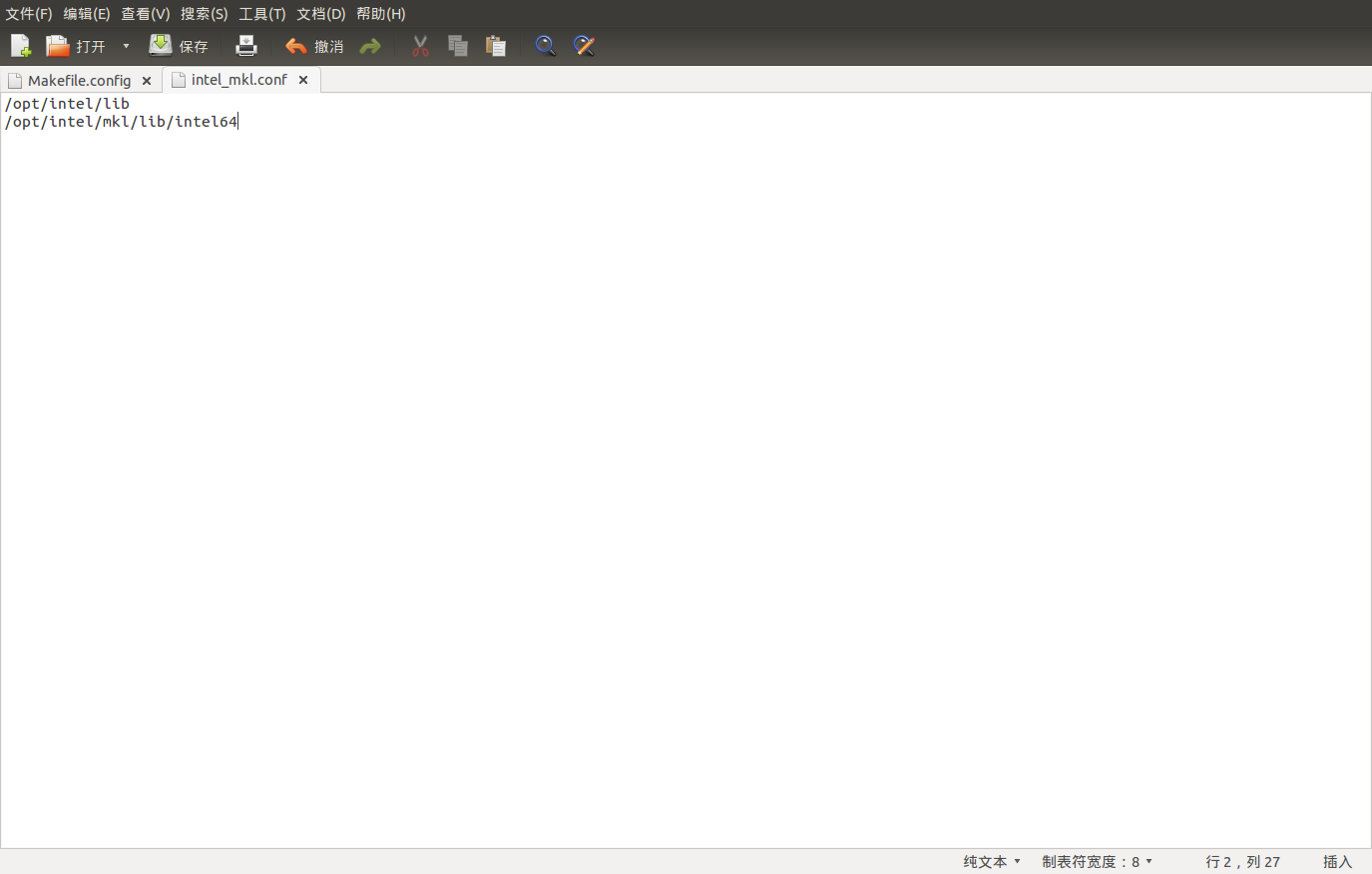

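Note: after installation you need to add the library path. Create the file /etc/ld.so.conf.d/intel_mkl.conf and add this line to it:

/opt/intel/lib/intel64

Replace the path with your own installation path. When you are done editing, run:

sudo ldconfig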

Install OpenCV (optional; if Caffe reports OpenCV errors at run time, reinstall it following this step)

See my other blog post, Installing OpenCV 2.4.9 on Ubuntu 14.04.

Install the Python environment required by Caffe

sudo apt-get install python-numpy python-scipy python-matplotlib python-sklearn python-skimage python-h5py python-protobuf python-leveldb python-networkx python-nose python-pandas python-gflags cython ipython python-pydot
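To confirm the packages above are importable, the hedged sketch below tries each module with the Python interpreter and prints the ones that fail. The module names are assumed import names for those packages (for example python-sklearn provides `sklearn`, and python-protobuf provides `google.protobuf`); `missing_py_modules` and the `PYTHON` override are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: try "import <module>" for each argument and print the
# modules that fail. PYTHON selects the interpreter (default: python).
missing_py_modules() {
  local mod
  for mod in "$@"; do
    "${PYTHON:-python}" -c "import $mod" >/dev/null 2>&1 || echo "$mod"
  done
}
```

For example, `missing_py_modules numpy scipy matplotlib sklearn skimage h5py google.protobuf leveldb networkx nose pandas gflags Cython IPython pydot` prints nothing when every module imports.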

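Install MATLAB

Caffe provides a MATLAB interface, so if you need MATLAB you can install it as well. The process is similar to Windows: first download MATLAB for Linux and extract it, then run:

sudo sh ./install.sh

A graphical installer appears; from there, crack and activate it the same way as on Windows. For installing and activating MATLAB on Windows, see my other blog post, Connecting MATLAB to Kinect V2. After installation, you can add a launcher icon:

sudo vi /usr/share/applications/Matlab.desktop

Enter the following:

[Desktop Entry]

My MATLAB is installed in the default location, as shown below: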

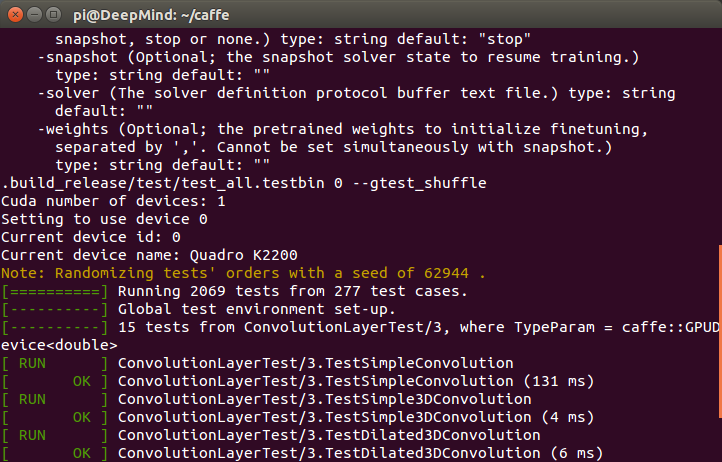

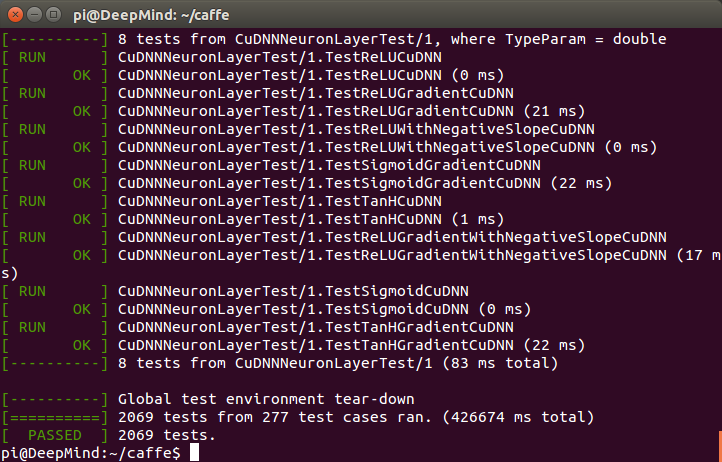
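A working launcher needs a few more keys than the [Desktop Entry] header. A hedged sketch is below: a small helper writes a complete launcher using keys from the freedesktop Desktop Entry format. The install root defaults to /usr/local/MATLAB/R2015b (the MATLAB_DIR used later in this post), while the icon path is purely an assumption, so point it at any PNG you like. `write_matlab_desktop` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a MATLAB desktop launcher.
# $1 = MATLAB root, $2 = output file, $3 = icon (the default icon path is an assumption).
write_matlab_desktop() {
  local root="${1:-/usr/local/MATLAB/R2015b}"
  local out="${2:-/usr/share/applications/Matlab.desktop}"
  local icon="${3:-$root/toolbox/shared/dastudio/resources/MatlabIcon.png}"
  # Unquoted EOF: $root and $icon are expanded into the file.
  cat > "$out" <<EOF
[Desktop Entry]
Type=Application
Name=Matlab
Comment=MATLAB
Exec=$root/bin/matlab -desktop
Icon=$icon
Terminal=false
Categories=Development;
EOF
}
```

Run as root with no arguments, it writes /usr/share/applications/Matlab.desktop for the default install location.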

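Build Caffe

Build the main program

The environment is finally fully configured, and we can happily build Caffe! Note that I used caffe-rc3.zip (30 Jan 2016). Go to the Caffe root directory and first create Makefile.config from the example file:

cp Makefile.config.example Makefile.config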

然后修改里面的内容,主要需要修改的参数包括

- CPU_ONLY 是否只使用CPU模式,没有GPU没安装CUDA的同学可以打开这个选项

- BLAS (使用intel mkl还是atlas)

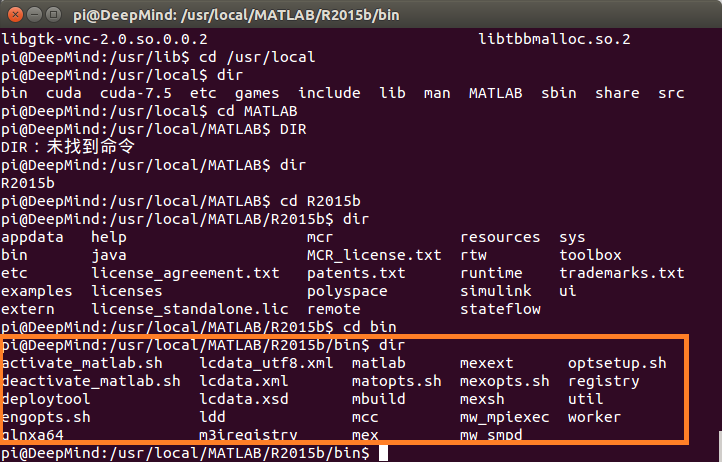

- MATLAB_DIR 如果需要使用MATLAB wrapper的同学需要指定matlab的安装路径, 如我的路径为

/usr/local/MATLAB/R2015b(注意该目录下需要包含bin文件夹,bin文件夹里应该包含mex二进制程序) - DEBUG 是否使用debug模式,打开此选项则可以在eclipse或者NSight中debug程序

- CUDA_ARCH 可根据你自己显卡对应的计算力改相应的 -gencode arch=compute_xx,code=compute_xx 。例如我的显卡是 NVIDIA K2200 对应的计算力是 5.0,所以我相应的设置为 -gencode arch=compute_50,code=compute_50
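The compute capability reported by deviceQuery maps onto the flag format above by dropping the dot. A minimal sketch, with `gencode_flag` as a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a compute capability such as "5.0" into
# "-gencode arch=compute_50,code=compute_50".
gencode_flag() {
  local cc="$1"
  if [[ ! "$cc" =~ ^[0-9]+\.[0-9]+$ ]]; then
    echo "expected a compute capability like 5.0, got '$cc'" >&2
    return 1
  fi
  local arch="${cc/./}"   # 5.0 -> 50, 3.5 -> 35
  printf '%s\n' "-gencode arch=compute_${arch},code=compute_${arch}"
}
```

`gencode_flag 5.0` prints `-gencode arch=compute_50,code=compute_50`, the K2200 setting above. A `code=compute_XX` entry embeds PTX that the driver JIT-compiles at load time; the stock Makefile.config.example also lists `code=sm_XX` entries, which embed prebuilt binaries for that architecture.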

Here is my configuration:

## Refer to http://caffe.berkeleyvision.org/installation.html
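The single-line settings listed above can also be applied with sed instead of an editor. The sketch below is a hypothetical helper, `set_config`, that rewrites a `KEY := VALUE` line and uncomments it if needed. It relies on GNU sed's `-i` option (as shipped with Ubuntu), does not handle the multi-line CUDA_ARCH block, and assumes the value contains no `|` or `&` characters.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set "KEY := VALUE" in Makefile.config, uncommenting a
# line such as "# CPU_ONLY := 1" if necessary. Every matching line is rewritten.
set_config() {
  local key="$1" value="$2" file="${3:-Makefile.config}"
  if ! grep -qE "^#? *$key *:=" "$file"; then
    echo "$key not found in $file" >&2
    return 1
  fi
  # "BLAS *:=" does not match BLAS_INCLUDE/BLAS_LIB, which continue with "_".
  sed -i -E "s|^#? *$key *:=.*|$key := $value|" "$file"
}
```

For example, run `set_config BLAS mkl` and `set_config MATLAB_DIR /usr/local/MATLAB/R2015b` in the Caffe root directory. If a key appears on several lines, commented or not, all of them end up with the new value.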

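With the settings done, start the build:

make all -j4

Note: -j4 sets how many jobs make runs in parallel, which speeds up the build. Choose the number after -j according to your CPU core count; my CPU has 4 cores, so I use -j4.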
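Rather than hard-coding -j4, the job count can be taken from the machine. A minimal sketch using `nproc` from GNU coreutils; `make_jobs_flag` is a hypothetical name. Note that nproc counts logical processors, so on a CPU with Hyper-Threading it reports more than the physical core count.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a make parallelism flag such as -j4, based on the
# number of processing units nproc reports.
make_jobs_flag() {
  printf '%s\n' "-j$(nproc)"
}
```

Usage: `make all "$(make_jobs_flag)"`.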

Build the MATLAB wrapper

Run:

make matcaffe

After that you can run the official MATLAB demo.

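Build the Python wrapper

make pycaffe

Note: the generated Caffe Python module cannot be used directly yet. I recommend running the following commands to add it to the current user's environment variables.

cd ~

In the file that opens (.bashrc), add the following line, replacing /home/pi/caffe with the path of your own Caffe directory:

export PYTHONPATH=/home/pi/caffe/python:$PYTHONPATH

Save and close the file, then run:

source .bashrc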
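Before starting Python, you can confirm that the Caffe python directory actually made it onto PYTHONPATH. The sketch below is a hypothetical helper, `path_contains`, that checks the colon-separated entries of a path-style value for an exact match (so `/home/pi/caffe/python/` with a trailing slash would not count).

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if DIR ($2) is one of the colon-separated
# entries of a path-style value ($1) such as "$PYTHONPATH".
path_contains() {
  local dir="$2" entry
  local -a entries
  IFS=':' read -r -a entries <<< "$1"
  for entry in "${entries[@]}"; do
    [ "$entry" = "$dir" ] && return 0
  done
  return 1
}
```

Usage: `path_contains "$PYTHONPATH" /home/pi/caffe/python && echo 'pycaffe path set'`.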

Open a new terminal (or press Ctrl + Alt + T) and enter:

python

If you see something like the following:

pi@DeepMind:~$ python

then the installation is essentially complete. Now go enjoy running the demos!

References

- Ubuntu Installation

- Ubuntu 16.04 or 15.10 Installation Guide

- Caffe + Ubuntu 12.04 64bit + CUDA 6.5 Setup Guide

- 普兒的技术传送门

- 浙商大嵌入式实验室-凉水煮茶 (Zhejiang Gongshang University Embedded Lab)

- Notes on Solving a Build Problem

- The Caffe Configuration Process

- error == cudaSuccess (8 vs. 0)

Please credit the source when reposting: 微记元 - Ubuntu 14.04 64bit + Caffe + CUDA 7.5 + Intel MKL Setup Guide